A friend recently asked me what I, as an experienced engineer, thought of the latest AI coding agents. I told him I was having an incredible amount of fun.

After sharing some of what I’ve been working on, I said: “This is all like crack for people with more ideas than time.”

I don’t know how many people are like this, but the AI boom has done strange things to my mind. I love starting and building new projects. I’ve built a lot, but I haven’t shared most of them. Rarely do I actually complete a project. I’ve learned an incredible amount during this time and it feels like it’s still accelerating.

Below is a list of projects over the last couple years, fueled by the AI boom.

The Bot (2020-2023)

My time at GitHub had me interested in the utility of having a bot, available on chat, for managing your life. Hubot’s design principles tended towards being as human-like as possible. Plain English commands. Friendly responses. So I started building my own version to interact with over Telegram. I called it Dualla.

Dualla could send me photos from my security cameras, take notes, interact with Home Assistant and tell me “Good Morning.” It was a fun project. Then AI happened and I went down a rabbit hole of ReAct, RAG, and more moving parts than I could keep working.

It was too early.

The Robot (2022-2024)

Yak API is a middleware layer for rovers. It presents as an HTTP API for interacting with your robot, likely running on a Raspberry Pi. I built it as an anti-ROS. This project really started to cook with early LLM tab completes.

The Coding Agent That Couldn’t Count (2023)

My thesis was that managing context windows was the major blocker for coding agents. The work was in getting the harness right and building tools that returned enough tokens to get good answers while staying under the then-small limit of 4k tokens.
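To make the budgeting problem concrete, here is a minimal sketch in Go of keeping only the most recent history that fits under a token limit. It is not the harness from the project. The names `estimateTokens` and `fitToBudget` are made up for illustration, and the four-bytes-per-token estimate is a rough assumption standing in for a real tokenizer.

```go
package main

import "fmt"

// estimateTokens is a crude stand-in for a real tokenizer (assumption):
// roughly one token per four bytes, rounded up.
func estimateTokens(s string) int {
	return (len(s) + 3) / 4
}

// fitToBudget returns the longest suffix of history whose estimated token
// count stays within budget, so the most recent entries survive.
func fitToBudget(history []string, budget int) []string {
	used := 0
	start := len(history)
	for i := len(history) - 1; i >= 0; i-- {
		cost := estimateTokens(history[i])
		if used+cost > budget {
			break // the next older entry would not fit; drop it and everything before it
		}
		used += cost
		start = i
	}
	return history[start:]
}

func main() {
	history := []string{
		"user: open main.go",
		"tool: <contents of a large file>",
		"user: rename the handler",
	}
	kept := fitToBudget(history, 4096)
	fmt.Printf("kept %d of %d entries\n", len(kept), len(history))
}
```

Dropping whole entries from the oldest end is the simplest policy. A real harness would probably also summarize or truncate large tool outputs rather than discard them outright.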

I wired up a harness optimized for using ed, thinking the model would be good at line editing and browsing code through this line-oriented interface.

It was not. gpt-3.5-turbo was really bad at dealing with line numbers and counting.

The Coder project didn’t work out, but the agent loop itself was interesting. I spun it out into a Go library I still use for all my projects. The core design principle takes inspiration from net/http in that everything is wrappers around:

type CompletionFunc func(context.Context, []*Message, []ToolDef) (*Message, error)

The Self-Hosting Arc (2024)

As part of my self-hosted software experiments, I was re-acquainting myself with the Vagrant VM tool. I’ve always hated tools like Puppet and Ansible for provisioning, so of course I wrote a Bash-based “convention” for scripting my VMs. I called it VagrantInit.

Around the same time I was briefly interested in RAG, vector search, and the core problem of “chunking” documents for indexing. I looked at an implementation in some early open source project, written in Python, and thought… my god, this is so inefficient. I built Chunker in Go. The core thesis was that efficient string handling would be way better than what was happening in Python. And it was. I also integrated Tree-sitter so code could be intelligently indexed.

I never found a use for any of it.

Then one night I watched the movie Furiosa and was tickled by a scene where the local tribal leader asks for his “History Man” for a “word burger.” I needed more of them so I built a website to do it. My first self-hosted, publicly available project.

The Winter of MCP (December 2024)

I woke up one morning with this idea of an LLM interacting with an application on my computer. So I wired up a system where, through MCP (which had just come out), Claude could create and interact with an Electron application it built locally. We built a Tic-Tac-Toe game where I could play directly against Claude.

Claude is terrible at Tic-Tac-Toe.

2025: Heating Up

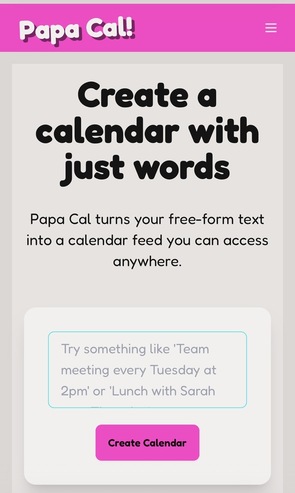

Papa Cal (March 2025). By March of that school year I was so overwhelmed with updates coming out of my kids’ school I thought maybe I could use AI to solve that. The idea is you can just type out your calendar, or copy and paste from a newsletter, or take a picture. Papa Cal creates a calendar feed you add to your calendar. It’s pretty nice, try it out.

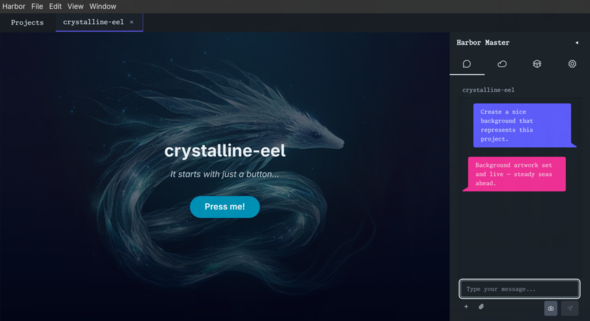

Harbor (June 2025). After the Electron MCP adventure I was still intrigued by the idea of an LLM building and interacting with an application. Harbor is a local app where you create projects, each with a chat interface. You tell it what you want and it builds it. By having a tightly controlled application environment, the coding agent has an optimized feedback loop – responding to JavaScript errors, taking its own screenshots, running the whole show.

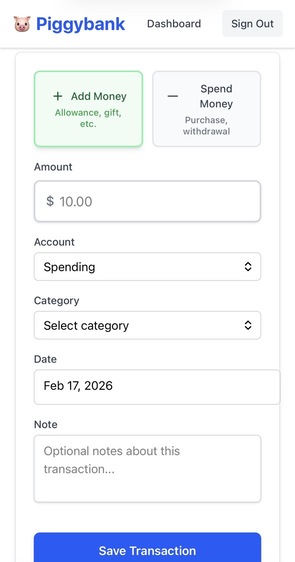

Piggybank (June 2025). My three kids receive cash gifts from Grandma for birthdays and Christmas. I’m supposed to keep track of their balance? Not anymore. Now they have their own bank. Rails.

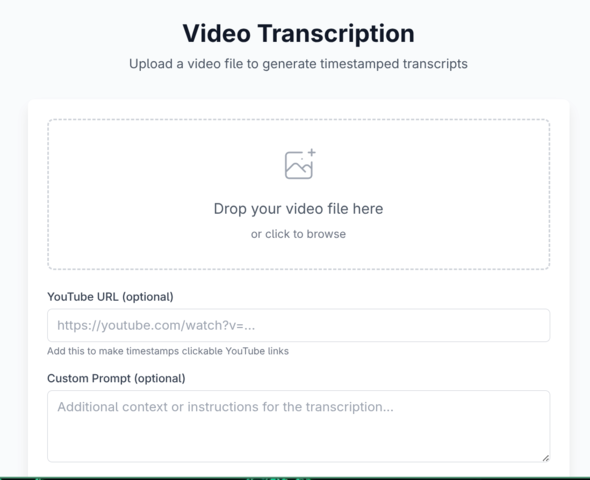

Transcript (June 2025). A very early, nearly pure-vibe-coded application I built for my wife to generate transcripts for videos. Drag and drop a video, the audio gets stripped, sent to Whisper for a raw transcript, then an LLM post-processes it into something readable. Python.

Fall 2025-Present: Takeoff

Claude Opus 4.5 came out on November 24, 2025.

SSHAmp (November 2025). I thought it would be cool to have a VM where when you SSH in, it immediately drops you into a coding agent. Everything on the system managed by AI. I was unable to get it working the way I wanted because of struggles with Vagrant and Packer. But the idea stuck with me.

Specter (December 2025). The key idea that takes you from coding agents generating a bunch of code to having them produce working code is feedback. I had a TUI project where the feedback loop wasn’t working – the agent couldn’t see the problem. So I built Specter to run as a virtual TTY and take screenshots so the agent can see what it’s doing.

Yo dawg, I heard you like TUIs in your TUIs, so I built a tool for your coding agent TUI to run TUIs. pic.twitter.com/xpXK1pyjKV

— Rhett Garber (@rhettford) November 26, 2025

Universal API (December 2025). A thought-leader mentioned how LLMs change the nature of APIs because they can translate unstructured content into structured data. I took this literally and built an application server where you upload any file and query it using any API you can think up. The LLM simply figures it out. A month later, Cloudflare lets you fetch any website as markdown.

KLNK (January 2026). After moving to Omarchy I started listening to my old MP3s. All the players are boring. So I had the idea of a custom radio station with an AI DJ. This started as a one-day project to see how far I could push vibe coding. It became more of an odyssey. I’m listening to it right now.

This is so close to working. My very own radio DJ. pic.twitter.com/G3uRuoeHzt

— Rhett Garber (@rhettford) January 28, 2026

Tank (February 2026). My earlier failure with SSHAmp had me wondering if there was a better way to build VMs. Building on VagrantInit, I thought about how nice it would be to define VMs in cacheable layers on the filesystem where I could share and reuse layers between projects. Now all my VMs are managed by Tank.
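One common way to make layers cacheable and shareable is to key each layer by a hash of its parent's key plus its own provisioning script. The sketch below shows that general technique in Go. It is an assumption for illustration, not a description of how Tank works, and `layerKey` and `chainKeys` are invented names.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// layerKey derives a cache key from the parent layer's key and this layer's
// provisioning script, so a change anywhere in the chain changes every key
// after it.
func layerKey(parentKey, script string) string {
	h := sha256.New()
	h.Write([]byte(parentKey))
	h.Write([]byte{0}) // separator so ("ab", "c") and ("a", "bc") hash differently
	h.Write([]byte(script))
	return hex.EncodeToString(h.Sum(nil))
}

// chainKeys returns one key per script, each chained to the one before it.
func chainKeys(scripts []string) []string {
	keys := make([]string, 0, len(scripts))
	parent := ""
	for _, s := range scripts {
		parent = layerKey(parent, s)
		keys = append(keys, parent)
	}
	return keys
}

func main() {
	// Two hypothetical projects that share a base layer but differ on top.
	a := chainKeys([]string{"apt-get install -y git", "install go toolchain"})
	b := chainKeys([]string{"apt-get install -y git", "install node"})
	fmt.Println("base layer shared:", a[0] == b[0])
	fmt.Println("top layer shared:", a[1] == b[1])
}
```

With keys like these, two projects that start from the same scripts resolve to the same directory on disk for the shared prefix, and only the layers that differ need to be built.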

Hydra (February 2026). OpenClaw exploded into popularity over the past few weeks. The maintainer was just acquired by OpenAI. I’ve been working with AI personal assistants since Dualla in 2020. Time to bring it back? I had some ideas on how such a system should be built. OpenClaw doesn’t appear to be built that way, so this is my chance to offer a different take.

Can you feel it?