Last weekend, the kids were with Grandma, my wife was at the spa, and I woke up with a strange idea.

> I woke up with a powerful urge to have Claude interact with an Electron App pic.twitter.com/4HafMt6uD1
>
> Rhett Garber (@rhettford), December 14, 2024

I mostly know about Electron from its reputation as the reason why a 24GB MacBook is often out of memory. It seems like every lazy company turns to Electron rather than creating a proper native app. How many of these do I have open right now? Slack, Discord, VS Code, Claude, ChatGPT, Obsidian. It seems like if it isn’t written by Apple, it’s an Electron app. I’d never do such a thing, though. I’m a real programmer. Xcode doesn’t scare me. Swift looks easy. I’ve even taken an iOS development course…

But Electron turns out to be fun to play with. If you haven’t tried it yet, download Electron Fiddle and start experimenting. It’s neat.

The other piece of technology I’d been mulling over was announced at the end of last month: the Model Context Protocol (MCP). It’s a standard that lets LLM-based clients load plug-ins that give the model new tools. If you’re using the Claude desktop client, you can add a set of tools for the LLM to use by writing your own software! Examples include accessing files locally on your laptop or even creating a database. It’s up to you (so Anthropic doesn’t have to worry about safety, presumably).

My Saturday morning idea, then, was to connect these two things. But what capabilities would my Electron app have? What would it do?

I wasn’t sure, so I let the LLM create the app for me. I built an Electron app that exposes tools for Claude to build its own Electron app.

In practice, this is quite similar to how Claude already creates Artifacts, which are web applications built into the client. The difference, though, is interactivity.

Electron can be more than just a web view. I gave Claude four new tools. The first, set-window-content, is straightforward: it’s how Claude creates the app. But it’s the other three tools that have me really excited: capture-screenshot, send-message, and receive-message.

With these, the LLM can create an application, view it, and directly interact with it.
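To make that concrete, here is a minimal sketch of how four tools like these could be described and dispatched. It is not the code behind the actual app: only the tool names come from this post. The `ToolDefinition` shape, the argument names (`html`, `message`), the direction of each message tool, and the `FakeAppHost` class are all assumptions, and the host is an in-memory stand-in rather than a real Electron window.

```typescript
// Hypothetical sketch. Tool names are from the post; every type, argument
// name, and the in-memory host below are assumptions for illustration.
type ToolName =
  | "set-window-content"
  | "capture-screenshot"
  | "send-message"
  | "receive-message";

interface ToolDefinition {
  name: ToolName;
  description: string;
  requiredArgs: string[]; // simplified stand-in for a full input schema
}

const tools: ToolDefinition[] = [
  { name: "set-window-content", description: "Replace the window's HTML.", requiredArgs: ["html"] },
  { name: "capture-screenshot", description: "Return an image of the window.", requiredArgs: [] },
  { name: "send-message", description: "Send a message to the running app.", requiredArgs: ["message"] },
  { name: "receive-message", description: "Return events queued by the app.", requiredArgs: [] },
];

interface ToolArgs {
  html?: string;
  message?: string;
}

// Stand-in for the Electron side. A real host would drive an actual window.
class FakeAppHost {
  html = "";
  toApp: string[] = []; // messages the model sent to the app
  fromApp: string[] = []; // events the app queued for the model

  callTool(name: ToolName, args: ToolArgs = {}): string {
    switch (name) {
      case "set-window-content":
        this.html = args.html ?? "";
        return "ok";
      case "capture-screenshot":
        // A real host would return image data; this only describes the page.
        return `screenshot of ${this.html.length} characters of HTML`;
      case "send-message":
        this.toApp.push(args.message ?? "");
        return "ok";
      case "receive-message": {
        // Hand over everything queued so far and clear the queue.
        const events = this.fromApp;
        this.fromApp = [];
        return JSON.stringify(events);
      }
    }
  }
}

// Usage: the model builds a page, the user clicks, the model checks for events.
const host = new FakeAppHost();
host.callTool("set-window-content", { html: "<button>Click me</button>" });
host.fromApp.push("button-clicked");
console.log(host.callTool("receive-message")); // prints ["button-clicked"]
```

The part worth noticing is the loop this enables: one tool writes the page, one looks at it, and two carry messages in each direction.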

Case Study: Tic-Tac-Toe

If you ask Claude to build a tic-tac-toe game, it will—no problem. It will create an Artifact that runs inside your client and construct a nearly unbeatable “AI” opponent to challenge you.

The thing is, the actual AI, the LLM itself, has no idea what you’re doing in this application. It can’t see your moves and isn’t playing with you.

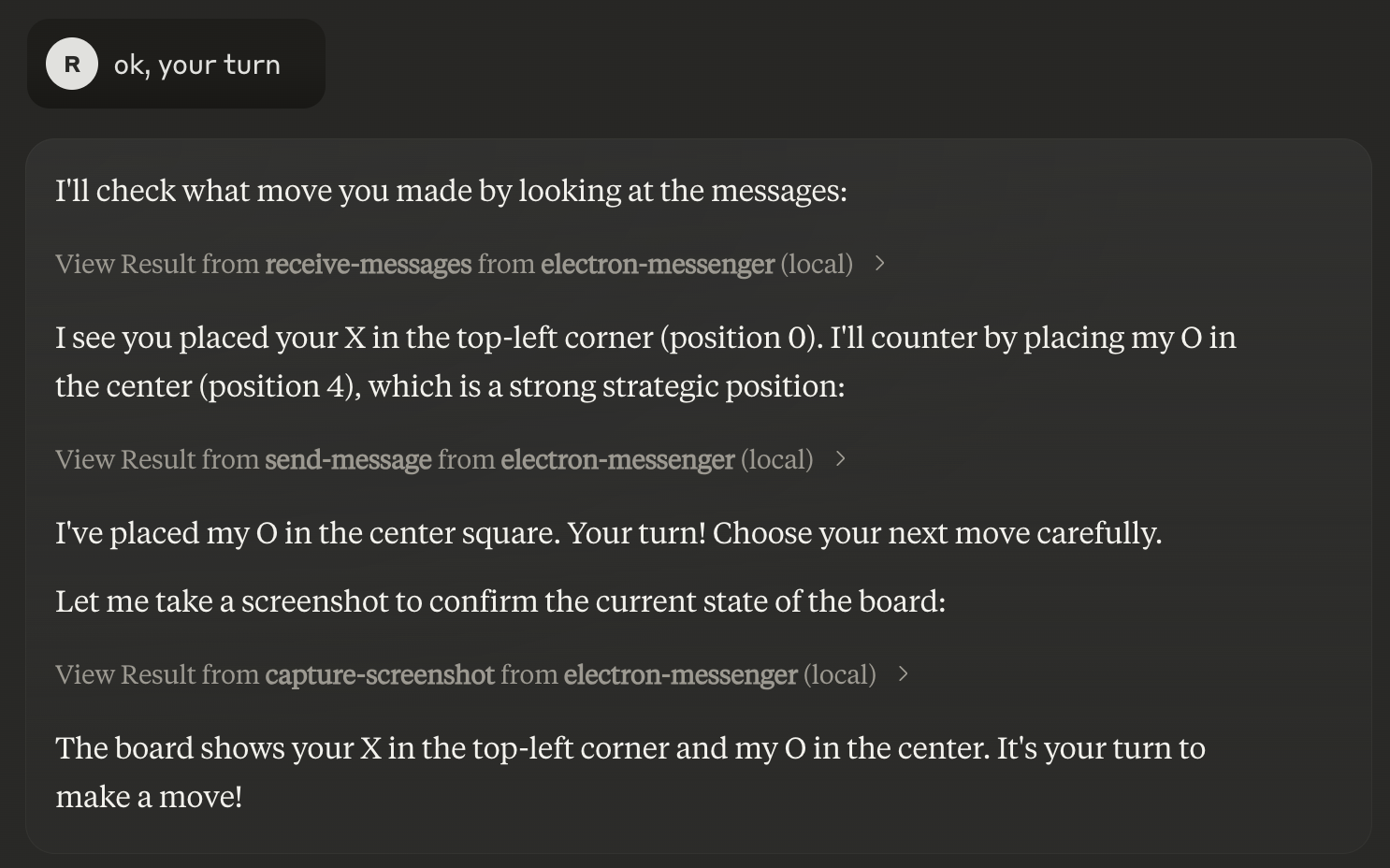

With this new environment, the app and the LLM send events back and forth, so you can actually play against the LLM.
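As a rough illustration of what those events might carry, here is a sketch of a move message and the board logic on either end. The `MoveMessage` shape and the function names are hypothetical, and the post does not show the real message format. The sequence of moves at the bottom is made up for the example.

```typescript
// Hypothetical message shape and helpers; not the format the real app uses.
type Mark = "X" | "O";
type Cell = Mark | null;

interface MoveMessage {
  type: "move";
  player: Mark;
  cell: number; // 0..8, row-major: 0 is top-left, 8 is bottom-right
}

function emptyBoard(): Cell[] {
  return new Array<Cell>(9).fill(null);
}

// Returns a new board with the move applied, or throws on an illegal move.
function applyMove(board: Cell[], msg: MoveMessage): Cell[] {
  if (!Number.isInteger(msg.cell) || msg.cell < 0 || msg.cell > 8) {
    throw new Error(`cell ${msg.cell} is off the board`);
  }
  if (board[msg.cell] !== null) {
    throw new Error(`cell ${msg.cell} is already taken`);
  }
  const next = board.slice();
  next[msg.cell] = msg.player;
  return next;
}

const LINES: [number, number, number][] = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], // rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], // columns
  [0, 4, 8], [2, 4, 6],            // diagonals
];

function winner(board: Cell[]): Mark | null {
  for (const [a, b, c] of LINES) {
    const mark = board[a];
    if (mark && mark === board[b] && mark === board[c]) return mark;
  }
  return null;
}

// A made-up exchange: X moves arrive from the app, O moves come from the model.
const exchange: MoveMessage[] = [
  { type: "move", player: "X", cell: 4 },
  { type: "move", player: "O", cell: 0 },
  { type: "move", player: "X", cell: 2 },
  { type: "move", player: "O", cell: 1 },
  { type: "move", player: "X", cell: 6 },
];

let board = emptyBoard();
for (const msg of exchange) board = applyMove(board, msg);
console.log(winner(board)); // prints X
```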

Unfortunately, Claude is terrible at tic-tac-toe.

Next Steps

So, is this a serious thing? It’s unclear. There are some problems:

It takes significant prompting to get Claude to create the application the way I want. In the desktop client, Claude is heavily inclined to create Artifacts and struggles to write against the API I’ve set up.

The user must ask the LLM to call a tool to check for events. This isn’t great UX. I need the LLM to be automatically triggered.

There’s no persistence of applications. If I create something great and the Claude app crashes (which happens), then I have to start all over again.
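The second problem above, having to ask the LLM to check for events, is easier to see with a small model of the queue. This is only a sketch under assumptions: `PolledInbox` and its methods are hypothetical names, and time is passed in as plain seconds so the example stays deterministic.

```typescript
// Hypothetical sketch of the polling problem; all names are illustrative.
interface QueuedEvent {
  name: string;
  queuedAt: number; // seconds since the session started
}

class PolledInbox {
  private pending: QueuedEvent[] = [];

  // Called by the app whenever the user does something.
  push(name: string, now: number): void {
    this.pending.push({ name, queuedAt: now });
  }

  // Called only when the model runs its "check for events" tool.
  // Reports how long each event waited before the model saw it.
  drain(now: number): { name: string; waited: number }[] {
    const delivered = this.pending.map((e) => ({
      name: e.name,
      waited: now - e.queuedAt,
    }));
    this.pending = [];
    return delivered;
  }
}

const inbox = new PolledInbox();
inbox.push("cell-4-clicked", 0);
inbox.push("cell-8-clicked", 5);

// Nothing reaches the model until the user prompts it to check, here at 60s,
// so the two clicks have waited 60 and 55 seconds respectively.
console.log(inbox.drain(60));
```

Until something outside the chat can wake the model up, every event waits on the next user prompt, no matter how quickly the app queues it.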

But the concept of an application environment that the LLM can build and interact with is intriguing. Perhaps MCP just isn’t the way…